Dataflow: How to Tell Which Machine Type Is Used

With Dataflow you can create separate Dataflow jobs that run the ETL whenever you want and write the results to a different output table each time. You can cancel a streaming job from the Dataflow monitoring interface or with the `gcloud dataflow jobs cancel` command.
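Here is a minimal Python sketch for scripting the cancel step. It assumes the Google Cloud CLI is installed and authenticated. The job ID and region are hypothetical placeholders, and `build_cancel_command` and `cancel_job` are illustrative helper names, not part of any Google library. With the default `dry_run=True`, the script only prints the command.

```python
import shlex
import subprocess
from typing import List, Optional


def build_cancel_command(job_id: str, region: str) -> List[str]:
    """Return the argument list for `gcloud dataflow jobs cancel`."""
    return ["gcloud", "dataflow", "jobs", "cancel", job_id, f"--region={region}"]


def cancel_job(job_id: str, region: str,
               dry_run: bool = True) -> Optional[subprocess.CompletedProcess]:
    """Cancel a Dataflow job; with dry_run=True, only print the command."""
    cmd = build_cancel_command(job_id, region)
    if dry_run:
        print(shlex.join(cmd))
        return None
    # check=True raises CalledProcessError if gcloud exits with an error
    return subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Hypothetical job ID and region, for illustration only
    cancel_job("2021-01-01_00_00_00-1234567890", "us-central1")
```

Passing an argument list to `subprocess.run`, rather than a shell string, avoids quoting problems with job IDs.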


You need to create a service account so that, when you run the application from your local machine, it can launch the GCP Dataflow pipeline with owner permissions.
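One common way to do this in Python assumes you have downloaded a JSON key for the service account. You then point the `GOOGLE_APPLICATION_CREDENTIALS` environment variable at that key file, because Google's client libraries read it for Application Default Credentials. The sketch below does this; the key path and helper names are hypothetical.

```python
import json
import os


def service_account_email(key: dict) -> str:
    """Return the client email from a parsed key, rejecting non-service-account files."""
    if key.get("type") != "service_account":
        raise ValueError("not a service account key file")
    return key["client_email"]


def use_service_account_key(key_path: str) -> str:
    """Validate the key file, then expose it to Application Default Credentials."""
    with open(key_path, encoding="utf-8") as f:
        email = service_account_email(json.load(f))
    # Client libraries created after this point (in this process) pick up the key
    os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = os.path.abspath(key_path)
    return email
```

For example, `use_service_account_key("keys/dataflow-runner.json")` uses a hypothetical path. The variable is set through `os.environ`, so it applies only to this process and to the child processes it starts.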

You will also need a GCP Cloud Storage bucket and a service account. A control/data flow graph (CDFG) has decision nodes and data flow nodes, and one type of decision node is enough to describe all the types of control in a sequential program.

To create your AutoML model, select the dataflow entity that holds the historical data and the field whose values you want to predict. Power BI then suggests the types of ML models that can be built from that data. Dataflows are available as part of these services' plans. To create a Dataflow job, the `roles/dataflow.admin` role includes the minimal set of permissions required to run and examine jobs.
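As a rough sketch, the Dataflow role requirements mentioned in this post can be written as a check on role names. This rests on two assumptions. First, the alternative set also includes `roles/storage.objectAdmin`, which Google's Dataflow documentation lists for staging files on Cloud Storage but this post does not mention. Second, real IAM evaluates permissions (including custom and inherited roles), so comparing role names is only an illustration.

```python
from typing import Iterable, Set

ADMIN_ROLE = "roles/dataflow.admin"

# Alternative to the admin role. ASSUMPTION: roles/storage.objectAdmin comes
# from Google's Dataflow docs, not from this post.
ALTERNATIVE_ROLES = frozenset({
    "roles/dataflow.developer",   # instantiate the job itself
    "roles/compute.viewer",       # access machine type information
    "roles/storage.objectAdmin",  # stage files on Cloud Storage
})


def missing_roles(granted_roles: Iterable[str]) -> Set[str]:
    """Return the roles still needed to create jobs (empty if none are missing)."""
    granted = set(granted_roles)
    if ADMIN_ROLE in granted:
        return set()
    return set(ALTERNATIVE_ROLES - granted)


def can_create_jobs(granted_roles: Iterable[str]) -> bool:
    """True if the role names alone satisfy one of the two options."""
    return not missing_roles(granted_roles)
```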

Let Dataflow solve all your problems. In a basic CDFG there are two types of nodes. From right to left or from top to bottom.

The default machine types for Dataflow are n1-standard-1 for batch jobs, n1-standard-2 for streaming jobs that use Streaming Engine, and n1-standard-4 for streaming jobs that do not use Streaming Engine. If you have Power BI dataflows A, B, and C in a single workspace, chained as A → B → C, then refreshing the source dataflow A also refreshes the downstream entities.
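To work out which machine type a job will use, you can write the rule above as a small function. This sketch hard-codes the defaults quoted in this post. Google can change them, so the current Dataflow documentation (or the job's own settings) remains the authority. `effective_machine_type` is a hypothetical helper name.

```python
from typing import Optional


def effective_machine_type(streaming: bool,
                           streaming_engine: bool = False,
                           requested: Optional[str] = None) -> str:
    """Return the worker machine type a Dataflow job would use.

    Defaults mirror the ones quoted in this post (ASSUMPTION: they are
    still current).
    """
    if requested:
        # An explicitly requested machine type overrides the service default
        return requested
    if not streaming:
        return "n1-standard-1"
    # Streaming jobs: smaller default when Streaming Engine is enabled
    return "n1-standard-2" if streaming_engine else "n1-standard-4"
```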

You can use any of the available Compute Engine machine types. The `project` option takes the ID of your Google Cloud project. Streaming pipelines do not terminate unless the user explicitly cancels them.

A classical von Neumann machine has a sequential flow of control: an operation (i.e., an instruction) is performed when the flow of control reaches it. You can override the default. A data flow node encapsulates a complete data flow graph to represent a basic block.
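The following minimal Python sketch builds a CDFG with exactly the two node types described here. The class names, and the use of Python callables for the operations inside each basic block, are illustrative assumptions, not a standard representation. The example encodes Euclid's subtraction-based GCD. One decision node forms the loop test, a second forms the if/else, and each branch is a data flow node.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple, Union

Env = Dict[str, int]


@dataclass
class DataFlowNode:
    """A basic block. Assignments are listed in a topological order of the
    block's data flow graph, so running them in sequence respects every
    data dependence."""
    assignments: List[Tuple[str, Callable[[Env], int]]]
    successor: Optional[str] = None


@dataclass
class DecisionNode:
    """The single control construct: choose a successor from a predicate."""
    predicate: Callable[[Env], bool]
    if_true: Optional[str] = None
    if_false: Optional[str] = None


Node = Union[DataFlowNode, DecisionNode]


def run_cdfg(nodes: Dict[str, Node], start: str, env: Env) -> Env:
    """Interpret the CDFG from `start` until control reaches None (exit)."""
    env = dict(env)
    current: Optional[str] = start
    while current is not None:
        node = nodes[current]
        if isinstance(node, DecisionNode):
            current = node.if_true if node.predicate(env) else node.if_false
        else:
            for target, expr in node.assignments:
                env[target] = expr(env)
            current = node.successor
    return env


# GCD by repeated subtraction (inputs must be positive integers).
GCD_CDFG: Dict[str, Node] = {
    "loop": DecisionNode(lambda e: e["a"] != e["b"], if_true="compare", if_false=None),
    "compare": DecisionNode(lambda e: e["a"] > e["b"], if_true="sub_a", if_false="sub_b"),
    "sub_a": DataFlowNode([("a", lambda e: e["a"] - e["b"])], successor="loop"),
    "sub_b": DataFlowNode([("b", lambda e: e["b"] - e["a"])], successor="loop"),
}
```

`run_cdfg(GCD_CDFG, "loop", {"a": 12, "b": 18})` ends with both variables equal to 6. The loop and the if/else both use the same `DecisionNode` type.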

The worker machine type option sets the Compute Engine machine type that Dataflow uses when starting worker VMs. Researchers at Microsoft Semantic Machines are taking a new approach to conversational AI: modeling dialogues with compositional dataflow graphs. In this article we look at what Power BI dataflows are and how to get started building dataflows in the Power BI service.

Your script then connects to the data source you specified. Using these tools, they bring data scientists' models into production. Creating an AutoML model.

A dataflow can be thought of as an extract, transform, and load (ETL) pipeline: it connects to source data, transforms the data, and loads the result into a destination. Dataflow also refers to the flow of data throughout the runtime of any program. After creating a model on the local machine, it …
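To make the extract, transform, and load stages concrete, here is a tiny in-memory sketch in Python. The CSV columns (`name`, `amount`) and the cleaning rules are hypothetical. A real dataflow would read from and write to actual sources and destinations.

```python
import csv
import io
from typing import Dict, List


def extract(csv_text: str) -> List[Dict[str, str]]:
    """Extract: parse raw CSV text into one dict per row."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def transform(rows: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Transform: trim and normalize names, convert amounts, drop rows without a name."""
    cleaned = []
    for row in rows:
        name = row["name"].strip()
        if not name:
            continue
        cleaned.append({"name": name.title(), "amount": float(row["amount"])})
    return cleaned


def load(rows: List[Dict[str, object]], table: Dict[str, float]) -> Dict[str, float]:
    """Load: aggregate amounts per name into the destination table."""
    for row in rows:
        table[row["name"]] = table.get(row["name"], 0.0) + row["amount"]
    return table
```

Chaining the three stages, `load(transform(extract(text)), {})`, keeps each stage independently testable.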

Learn how the framework supports flexible, open-ended conversations, and explore the dataset and leaderboard. It can also be described as a streaming process. Although Dataflow was not built for that purpose, it can do that for you.

The following table describes dataflow features and their availability. The `roles/dataflow.developer` role is needed to instantiate the job itself. Paste the copied query into the blank query for the dataflow.

Dataflow can be used to version data from the source into multiple destination tables. Open the Power BI dataflow, and then select Get data for a blank query. Dataflow modeling describes hardware in terms of the flow of data from input to output.
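A simple way to version each run into its own destination table is to append the run's UTC timestamp to a base table name. This sketch makes two assumptions. The `PROJECT:DATASET.TABLE` string is the table-spec format that Apache Beam's BigQuery sink accepts. The name check (letters, digits, underscores) is a conservative rule that is stricter than BigQuery's actual naming rules. Project, dataset, and table names are hypothetical.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Conservative name rule (ASSUMPTION: stricter than BigQuery actually requires)
_VALID_NAME = re.compile(r"^[A-Za-z0-9_]+$")


def versioned_table(project: str, dataset: str, base: str,
                    run_time: Optional[datetime] = None) -> str:
    """Return a per-run table spec such as 'proj:ds.orders_20210504_030201'."""
    if not _VALID_NAME.match(base):
        raise ValueError(f"unsupported table name: {base!r}")
    run_time = run_time or datetime.now(timezone.utc)
    return f"{project}:{dataset}.{base}_{run_time:%Y%m%d_%H%M%S}"
```

Timestamps formatted this way sort lexically in chronological order, so listing the tables also lists the versions in run order.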

Most dataflow capabilities are available in both Power Apps and Power BI. A CDFG uses a data flow graph as an element and adds constructs to describe control. Search for Data Flow in the pipeline Activities pane, and drag a Data Flow activity to the pipeline canvas.

You can set a custom machine type for a Dataflow job by specifying its name as `custom-<number of vCPUs>-<memory in MB>`. However, that answer is for the Java API and the old Dataflow version, not the newer Apache Beam implementation and Python. Select the new Data Flow activity on the canvas if it is not already selected, and then select its Settings tab to edit its details. The `runner` option sets the pipeline runner that executes your pipeline.
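The next sketch builds such a custom machine type name and formats it as a worker flag. It assumes the Beam Python SDK's `--machine_type` flag (an alias of `--worker_machine_type`). It checks only two rules: at least one vCPU, and total memory as a positive multiple of 256 MB. Family-specific limits, such as allowed vCPU counts or memory per vCPU, are deliberately not checked.

```python
def custom_machine_type(vcpus: int, memory_mb: int) -> str:
    """Return a Compute Engine custom machine type name such as 'custom-4-15360'.

    Only basic rules are checked; per-family limits are NOT validated here.
    """
    if vcpus < 1:
        raise ValueError("a custom machine type needs at least one vCPU")
    if memory_mb <= 0 or memory_mb % 256 != 0:
        raise ValueError("memory must be a positive multiple of 256 MB")
    return f"custom-{vcpus}-{memory_mb}"


def machine_type_flag(machine_type: str) -> str:
    """Format the worker machine type as a Beam Python command-line flag."""
    return f"--machine_type={machine_type}"
```

For example, `machine_type_flag(custom_machine_type(4, 15360))` yields `--machine_type=custom-4-15360`, which requests 4 vCPUs and 15 GB of memory per worker.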

The Dataflow service determines the default value. In LabVIEW programming, dataflow determines the order of execution: a node executes only when data is available at all of its required inputs. The checkpoint key is used to set the checkpoint when a data flow is used for change data capture.

LabVIEW runs on many operating systems, such as Windows, macOS, and Linux. A dataflow machine is a computer in which the primitive operations are triggered by the availability of their inputs or operands.

The principles and complications of … Dataflow machines are programmable computers whose hardware is optimized for fine-grain, data-driven parallel computation. Alternatively, instead of the admin role, the following permissions are required to create a job.

Data scientists use Python with its excellent libraries. To execute your pipeline using Dataflow, set the following pipeline options. Streaming jobs use a Compute Engine machine type of n1-standard-2 or higher by default.
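To check which machine type running workers actually use, one option is to list Compute Engine instances and read each instance's `machineType` field. Compute Engine reports this field as a URL that ends in the type name. The sketch assumes that Dataflow labels worker VMs with a `dataflow_job_id` label, so verify that label on your own workers before relying on it. `worker_machine_types` and the sample data in the test are hypothetical.

```python
import json
import subprocess
from typing import Dict, List


def list_instances() -> List[dict]:
    """Return Compute Engine instances as parsed JSON (requires the gcloud CLI)."""
    out = subprocess.run(
        ["gcloud", "compute", "instances", "list", "--format=json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def machine_type_name(machine_type_url: str) -> str:
    """'.../zones/us-central1-a/machineTypes/n1-standard-1' -> 'n1-standard-1'."""
    return machine_type_url.rsplit("/", 1)[-1]


def worker_machine_types(instances: List[dict], job_id: str,
                         label_key: str = "dataflow_job_id") -> Dict[str, str]:
    """Map worker VM name -> machine type for VMs labeled with this job ID.

    ASSUMPTION: Dataflow workers carry a `dataflow_job_id` label.
    """
    return {
        inst["name"]: machine_type_name(inst["machineType"])
        for inst in instances
        if (inst.get("labels") or {}).get(label_key) == job_id
    }
```

Usage: `worker_machine_types(list_instances(), "<your job ID>")`.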

The temporary location option takes a Cloud Storage path where Dataflow stages most temporary files. The following list shows which connectors you can currently use by copying and pasting the M query into a blank query. Dataflow capabilities in Microsoft Power Platform services.

Next, Power BI analyzes the other available fields in the selected entity to suggest the input fields. In a von Neumann machine, an instruction is performed as and when the flow of control reaches it. By contrast, in a dataflow machine, data values flow between operations, and an operation executes as soon as its operands are available.

Dataflow machines are data-driven at the instruction granularity. Java, Scala, own scheduler, etc. However, in a chain of dataflows A → B → C, refreshing C does not refresh A or B; you have to refresh those independently.
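The chained-refresh behavior described in this post can be modeled as a walk over a dependency graph. This is a sketch of that described behavior, not Power BI's API. The graph maps each dataflow to its direct downstream dataflows, and `refresh_order` is a hypothetical helper.

```python
from collections import deque
from typing import Dict, List


def refresh_order(downstream: Dict[str, List[str]], start: str) -> List[str]:
    """Return `start` followed by every dataflow downstream of it (breadth-first)."""
    order: List[str] = []
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        order.append(node)
        for child in downstream.get(node, []):
            if child not in seen:  # guard against refreshing a dataflow twice
                seen.add(child)
                queue.append(child)
    return order


# The A -> B -> C chain from this post
CHAIN = {"A": ["B"], "B": ["C"], "C": []}
```

Refreshing A reaches B and C, while refreshing C reaches only C, which is why A and B must then be refreshed independently.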

Dataflow execution is activated by data availability: only data dependences constrain parallelism, and programs are usually represented as graphs. An introduction to Power BI dataflows. The `roles/compute.viewer` role is needed to access machine type information and view other settings.
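The firing rule of a dataflow machine says that an operation executes as soon as all of its operands are available. You can simulate this rule in a few lines of Python. This toy interpreter is an illustration only, not a model of any real dataflow machine, and the graph format and names are hypothetical. It records which nodes become ready in the same step, because those nodes have no data dependences on each other and could run in parallel.

```python
import operator
from typing import Callable, Dict, List, Tuple

# node name -> (operation, names of the operands it waits for)
Graph = Dict[str, Tuple[Callable[..., float], List[str]]]


def run_dataflow(graph: Graph, inputs: Dict[str, float]) -> Tuple[Dict[str, float], List[List[str]]]:
    """Fire nodes whenever all their operands are available; return values and firing steps."""
    values = dict(inputs)   # tokens that are already available
    pending = dict(graph)   # nodes that have not fired yet
    steps: List[List[str]] = []
    while pending:
        ready = [name for name, (_, args) in pending.items()
                 if all(arg in values for arg in args)]
        if not ready:
            raise ValueError("graph has a cycle or a missing input")
        # Ready nodes do not depend on each other, so they could fire in parallel
        fired = {name: pending[name][0](*(values[a] for a in pending[name][1]))
                 for name in ready}
        values.update(fired)
        for name in ready:
            del pending[name]
        steps.append(sorted(ready))
    return values, steps


# (a + b) * (c - d) as a dataflow graph
EXPR: Graph = {
    "sum": (operator.add, ["a", "b"]),
    "diff": (operator.sub, ["c", "d"]),
    "product": (operator.mul, ["sum", "diff"]),
}
```

With a=2, b=3, c=10, d=4, `sum` and `diff` fire together in the first step, and `product` (30) fires in the second.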

This comes from the discussion "Is it possible to use a custom machine for Dataflow instances?". For example, to describe an AND gate with dataflow modeling, the code looks something like `assign out = a & b;`. Some dataflow features are either product-specific or available in different product plans.

For Google Cloud execution, the runner must be `DataflowRunner`. Setting required options.
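The sketch below assembles these options as command-line flags for the Apache Beam Python SDK. It assumes the Python flag names `--runner`, `--project`, `--region`, `--temp_location`, `--machine_type`, and `--streaming`; the project, region, and bucket are hypothetical. You could pass the resulting list to `PipelineOptions` from `apache_beam.options.pipeline_options`, which is left out here so the snippet runs without Beam installed.

```python
from typing import List, Optional


def dataflow_flags(project: str, region: str, temp_location: str,
                   machine_type: Optional[str] = None,
                   streaming: bool = False) -> List[str]:
    """Build pipeline flags for running on Dataflow (ASSUMPTION: Beam Python flag names)."""
    if not temp_location.startswith("gs://"):
        raise ValueError("temp_location must be a Cloud Storage path (gs://...)")
    flags = [
        "--runner=DataflowRunner",  # required for Google Cloud execution
        f"--project={project}",
        f"--region={region}",
        f"--temp_location={temp_location}",
    ]
    if machine_type:
        # Omit to let the Dataflow service pick its default machine type
        flags.append(f"--machine_type={machine_type}")
    if streaming:
        flags.append("--streaming")
    return flags
```

Leaving `machine_type` unset keeps the service default. Setting it makes the machine type you will see on the workers explicit.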

Data Flow Diagrams Explained Biro Administrasi Registrasi Kemahasiswaan Dan Informasi

Data Flow For Process Control Download Scientific Diagram

Em 4 Data Flow Machine Computer Museum

Suggested Data Flow And Mqtt Based Protocol For Transmission Of Ml Download Scientific Diagram

A Conceptual Representation Of The Data Flow Computer Architecture The Download Scientific Diagram

Approaches For Data Flow Diagrams Dfds A Dfd Representations B Download Scientific Diagram

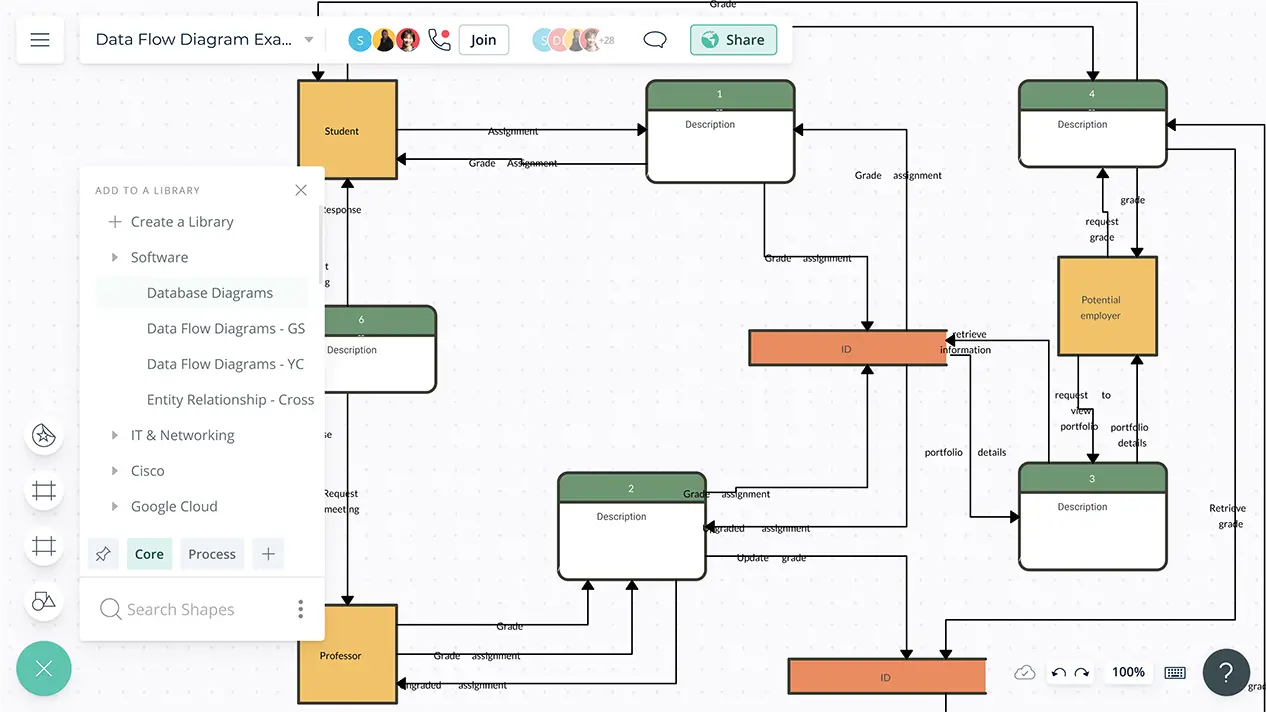

Data Flow Diagram Online Dfd Maker Tips And Templates Creately

8ms Ishikawa Diagram Effect Cause Category Problem Solving Activities Ishikawa Diagram Data Flow Diagram

Data Flow Diagram Exle Library Management System Data Flow Diagram Flow Chart Template Flow Chart

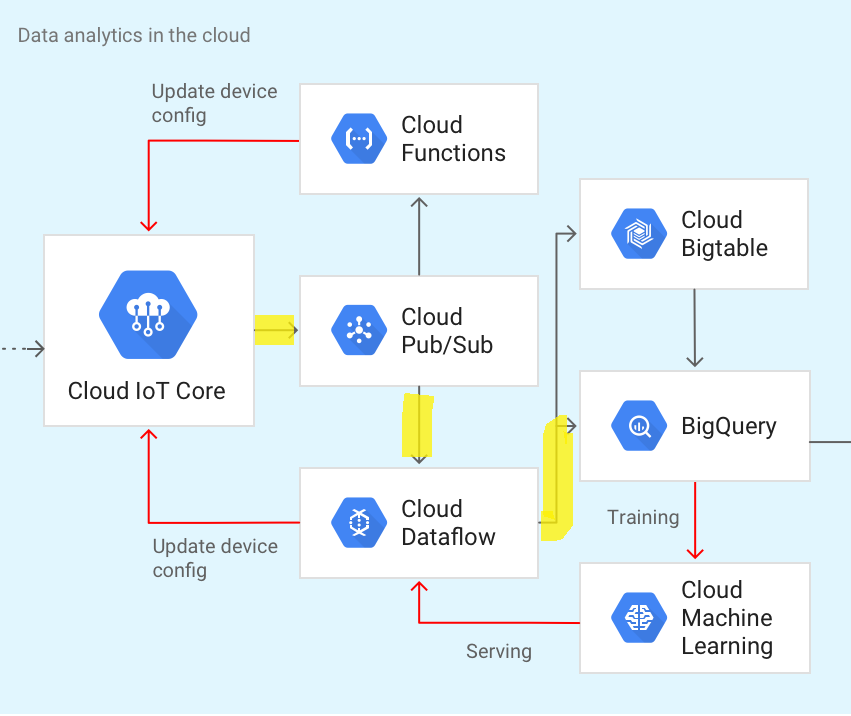

How To Create Dataflow Iot Pipeline Google Cloud Platform By Huzaifa Kapasi Towards Data Science

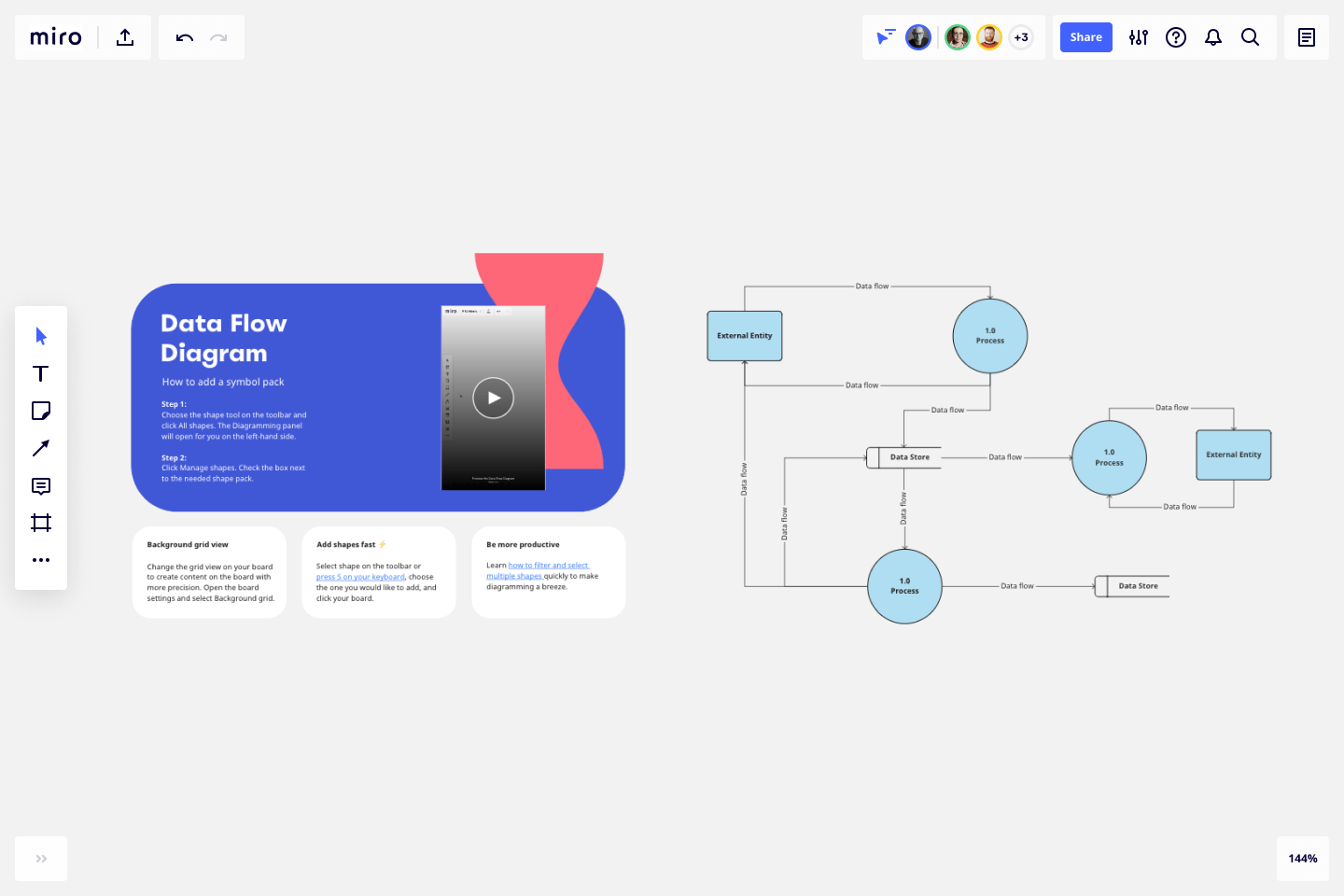

Data Flow Diagram Online Dfd Template Miro

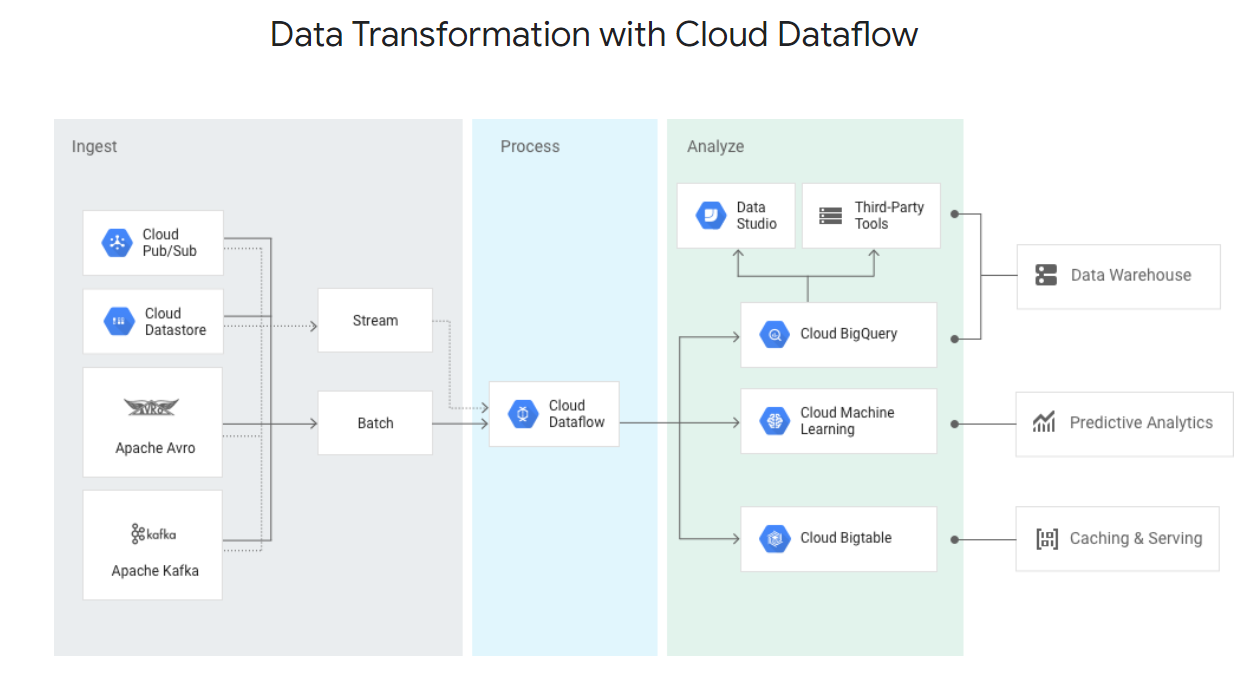

Coding A Batch Processing Pipeline With Google Dataflow And Apache Beam By Handerson Contreras Analytics Vidhya Medium

Pin By Meera Academyy On Uml Use Case Web Design Tips Data Flow Diagram

Ai Data Mining Data Mining Machine Learning Deep Learning

A Data Flow Diagram Showing The Steps That The Framework Goes Through Download Scientific Diagram

Data Flow Diagram Staruml Documentation

Data Flow Diagram Between User And Administrator Download Scientific Diagram

How To Use Google Cloud Dataflow With Tensorflow For Batch Predictive Analysis Google Cloud Big Data And Machine Learning Machine Learning Models Predictions

Comments

Post a Comment